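Automatically Downloading Website Images with a Python 3 and Scrapy Crawler

This article explains how to install and use Scrapy to crawl images from a given website. It covers installing python3, Scrapy, and the PyCharm CM tool, and walks through a working example of an image-crawling project.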

Tools / Materials

Ubuntu 18

Python3

A computer connected to the internet

Preparing the Scrapy development environment

1. Install python3 on Ubuntu:

    hxb@lion:~$ sudo apt-get install python3

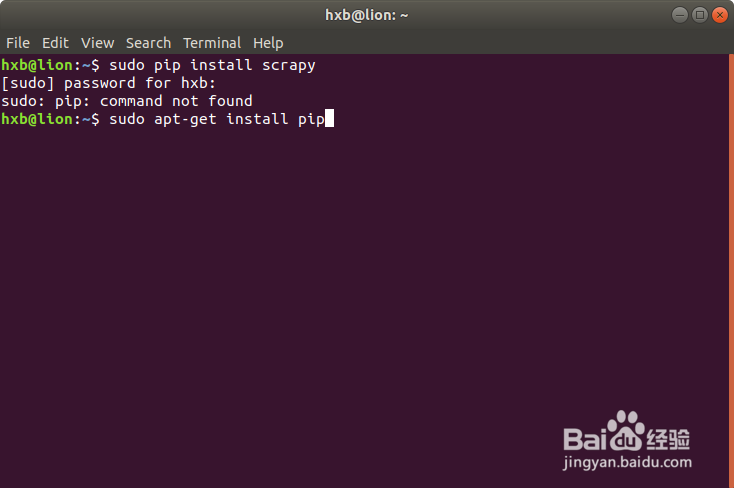

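2. Install pip, which is used to install the Python libraries that Scrapy depends on:

    hxb@lion:~$ sudo apt install python-pip

Query the pip version. At this point pip is still bound to Python 2.7:

    hxb@lion:~$ pip -V
    pip 9.0.1 from /usr/lib/python2.7/dist-packages (python 2.7)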

3. Use the following command to switch between the python2 and python3 environments:

    sudo update-alternatives --config python

Example session, selecting python3:

    hxb@lion:~$ sudo update-alternatives --config python
    There are 2 choices for the alternative python (providing /usr/bin/python).

      Selection    Path               Priority   Status
    ------------------------------------------------------------
      0            /usr/bin/python3    150       auto mode
    * 1            /usr/bin/python2    100       manual mode
      2            /usr/bin/python3    150       manual mode

    Press <enter> to keep the current choice[*], or type selection number: 2
    update-alternatives: using /usr/bin/python3 to provide /usr/bin/python (python) in manual mode
    hxb@lion:~$ python -V
    Python 3.6.5

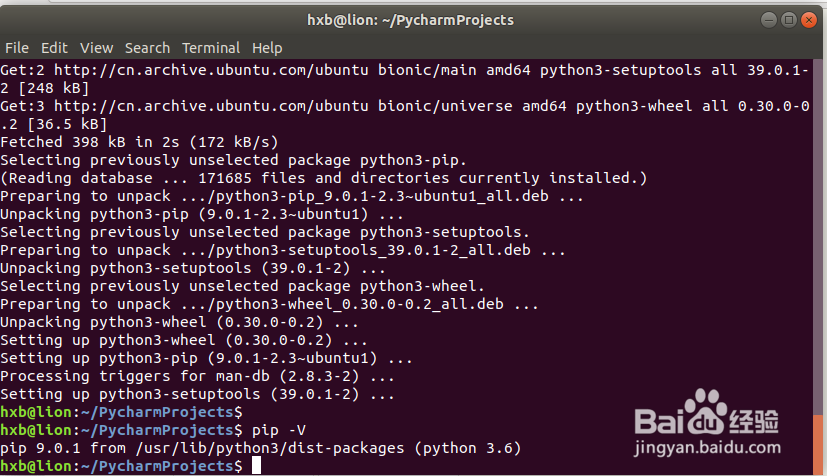

5. Install pip for python3:

    hxb@lion:~/PycharmProjects$ sudo apt-get install python3-pip
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following additional packages will be installed:
      python3-setuptools python3-wheel
    Suggested packages:
      python-setuptools-doc
    The following NEW packages will be installed:
      python3-pip python3-setuptools python3-wheel
    0 upgraded, 3 newly installed, 0 to remove and 1 not upgraded.
    Need to get 398 kB of archives.
    After this operation, 2,073 kB of additional disk space will be used.
    Do you want to continue? [Y/n] y
    Get:1 http://cn.archive.ubuntu.com/ubuntu bionic-updates/universe amd64 python3-pip all 9.0.1-2.3~ubuntu1 [114 kB]
    Get:2 http://cn.archive.ubuntu.com/ubuntu bionic/main amd64 python3-setuptools all 39.0.1-2 [248 kB]
    Get:3 http://cn.archive.ubuntu.com/ubuntu bionic/universe amd64 python3-wheel all 0.30.0-0.2 [36.5 kB]
    Fetched 398 kB in 2s (172 kB/s)
    Selecting previously unselected package python3-pip.
    (Reading database ... 171685 files and directories currently installed.)
    Preparing to unpack .../python3-pip_9.0.1-2.3~ubuntu1_all.deb ...
    Unpacking python3-pip (9.0.1-2.3~ubuntu1) ...
    Selecting previously unselected package python3-setuptools.
    Preparing to unpack .../python3-setuptools_39.0.1-2_all.deb ...
    Unpacking python3-setuptools (39.0.1-2) ...
    Selecting previously unselected package python3-wheel.
    Preparing to unpack .../python3-wheel_0.30.0-0.2_all.deb ...
    Unpacking python3-wheel (0.30.0-0.2) ...
    Setting up python3-wheel (0.30.0-0.2) ...
    Setting up python3-pip (9.0.1-2.3~ubuntu1) ...
    Processing triggers for man-db (2.8.3-2) ...
    Setting up python3-setuptools (39.0.1-2) ...

6. Check that pip works in the python3 environment:

    hxb@lion:~/PycharmProjects$ pip -V
    pip 9.0.1 from /usr/lib/python3/dist-packages (python 3.6)
    hxb@lion:~/PycharmProjects$

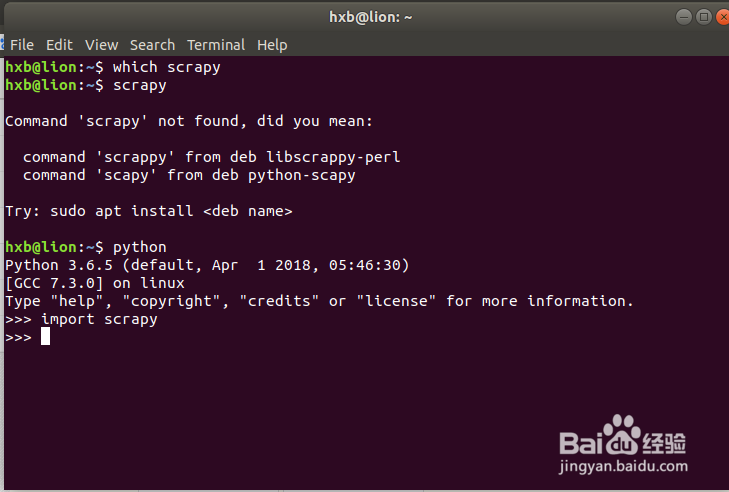

9. Running the Scrapy installation with sudo resolves the error from step 8:

    sudo -H pip install scrapy

Before the installation, the scrapy command is not available:

    hxb@lion:~/PycharmProjects$ scrapy
    Command 'scrapy' not found, did you mean:
      command 'scapy' from deb python-scapy
      command 'scrappy' from deb libscrappy-perl
    Try: sudo apt install <deb name>

Install Scrapy with sudo:

    hxb@lion:~$ sudo -H pip install scrapy
    Successfully installed Automat-0.7.0 PyDispatcher-2.0.5 Twisted-18.4.0 attrs-18.1.0 cffi-1.11.5 constantly-15.1.0 cryptography-2.2.2 cssselect-1.0.3 hyperlink-18.0.0 incremental-17.5.0 lxml-4.2.3 parsel-1.5.0 pyOpenSSL-18.0.0 pyasn1-0.4.3 pyasn1-modules-0.2.2 pycparser-2.18 queuelib-1.5.0 scrapy-1.5.0 service-identity-17.0.0 w3lib-1.19.0 zope.interface-4.5.0
    hxb@lion:~$

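11. Install the other libraries that Scrapy depends on:

    hxb@lion:~/PycharmProjects$ sudo apt-get install python-dev python-pip libxml2-dev libxslt1-dev zlib1g-dev libffi-dev libssl-dev

Note that on Ubuntu 18 python-dev and python-pip are the Python 2 packages; the Python 3 counterparts are python3-dev and python3-pip.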
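Once the installation steps are done, a quick check from Python itself can confirm that the interpreter in use is Python 3 and that pip and Scrapy are importable from it. The following is a minimal sketch; the function name `check_environment` is made up for this illustration and is not part of Scrapy or pip.

```python
import importlib.util
import sys


def check_environment(modules=("pip", "scrapy")):
    """Report whether Python 3 is active and which modules can be imported."""
    report = {"python3": sys.version_info.major == 3}
    for name in modules:
        # find_spec returns None when a top-level module cannot be located.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key}: {'ok' if ok else 'missing'}")
```

Running it with the same `python` that `update-alternatives` selected shows whether Scrapy was installed for that interpreter or for the other one.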

Creating a Python project and checking that the environment is ready

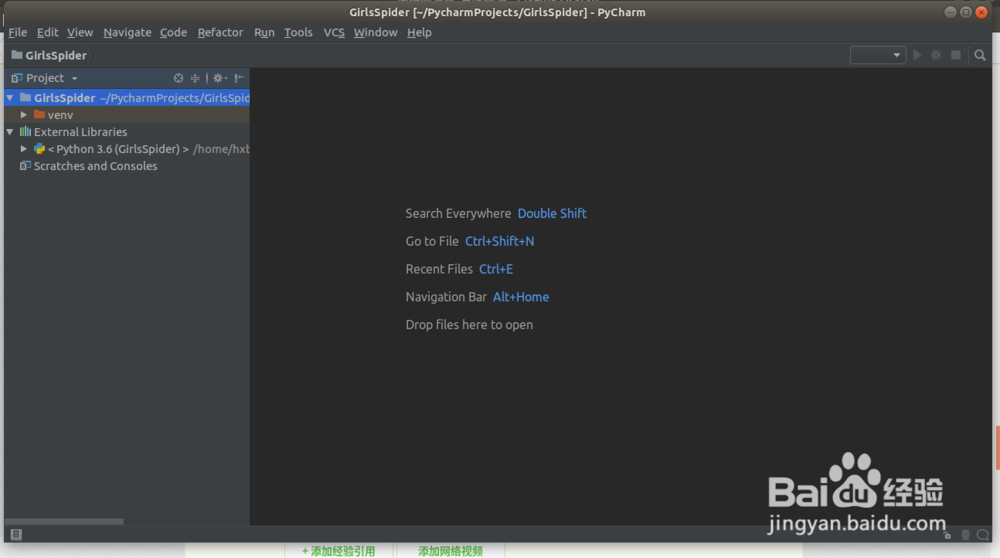

1. In the PyCharm CM development tool, create a new Python 3 project named "GirlsSpider".

Creating the Scrapy project

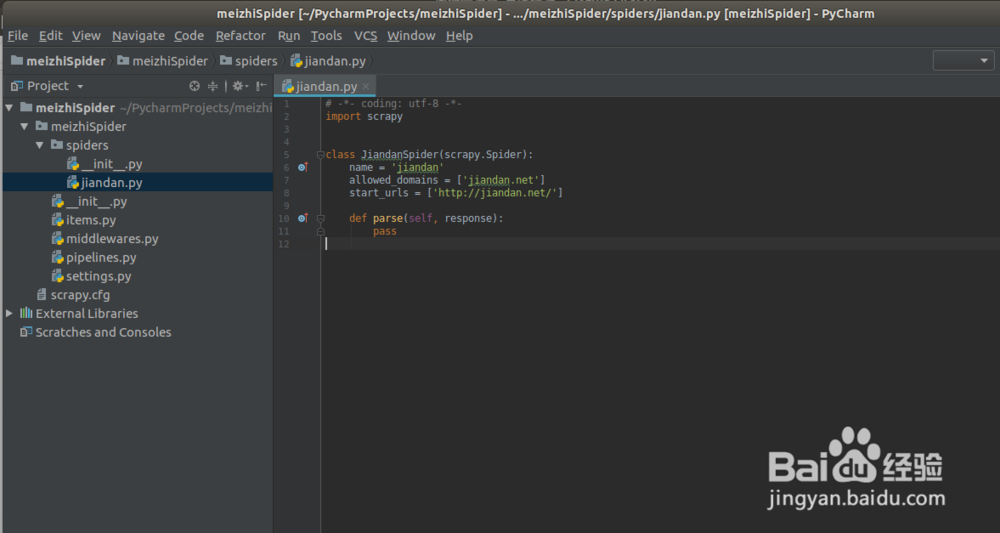

1. Use the scrapy command to create the crawler project skeleton:

    hxb@lion:~/PycharmProjects$ scrapy startproject meizhiSpider
    New Scrapy project 'meizhiSpider', using template directory '/home/hxb/.local/lib/python3.6/site-packages/scrapy/templates/project', created in:
        /home/hxb/PycharmProjects/meizhiSpider

    You can start your first spider with:
        cd meizhiSpider
        scrapy genspider example example.com
    hxb@lion:~/PycharmProjects$
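To confirm that `scrapy startproject` produced the usual skeleton, the generated files can be checked from Python. This sketch assumes the default Scrapy project template (a `scrapy.cfg` next to a package of the same name as the project); the helper names are invented for this example.

```python
from pathlib import Path


def expected_project_files(name):
    """Files the default Scrapy project template is expected to create."""
    return [
        "scrapy.cfg",
        f"{name}/__init__.py",
        f"{name}/items.py",
        f"{name}/middlewares.py",
        f"{name}/pipelines.py",
        f"{name}/settings.py",
        f"{name}/spiders/__init__.py",
    ]


def missing_project_files(project_root, name):
    """Return the expected files that do not exist under project_root."""
    root = Path(project_root)
    return [rel for rel in expected_project_files(name) if not (root / rel).is_file()]


if __name__ == "__main__":
    # Path taken from the startproject output shown in this article.
    root = Path.home() / "PycharmProjects" / "meizhiSpider"
    print(missing_project_files(root, "meizhiSpider") or "project skeleton is complete")
```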

2. Write the code in the following order:

   1. jiandan.py: the spider code (see the accompanying screenshot)
   2. items.py: defines the items for the scraped results
   3. pipelines.py: saves the scraped results
   4. settings.py: settings for Scrapy
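The article's own jiandan.py is only available as a screenshot, so the following is not the author's code. It is a minimal sketch of what such a spider could look like, under these assumptions: the start URL is a hypothetical placeholder, items are plain dicts with an `image_urls` key instead of a class from items.py, and image links are extracted with a small standard-library helper (`extract_image_urls`, a name invented here) rather than Scrapy selectors, so the extraction logic can be tried without Scrapy installed.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _ImgSrcCollector(HTMLParser):
    """Collect the src attribute of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def extract_image_urls(html, base_url):
    """Return absolute image URLs found in html, in document order."""
    collector = _ImgSrcCollector()
    collector.feed(html)
    return [urljoin(base_url, src) for src in collector.sources]


try:
    import scrapy
except ImportError:  # lets the helper above be tried without Scrapy installed
    scrapy = None

if scrapy is not None:

    class JiandanSpider(scrapy.Spider):
        name = "jiandan"  # matches `scrapy crawl jiandan`
        start_urls = ["https://example.com/gallery"]  # hypothetical placeholder

        def parse(self, response):
            # One plain-dict item per page; Scrapy accepts dicts as items.
            yield {"image_urls": extract_image_urls(response.text, response.url)}
```

In a real project the class would live in the project's spiders directory, and the dict could be replaced by an item class declared in items.py with a matching `image_urls` field.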

3. Run the crawler. You can see it working and steadily downloading images to the local disk.

   1. Run the spider:

    hxb@lion:~/PycharmProjects/meizhiSpider/meizhiSpider$ scrapy crawl jiandan

   2. The image files are saved in the directory /home/hxb/jiandan
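The article does not reproduce pipelines.py, so the code below is only a sketch of a pipeline that would save images into `~/jiandan`, not the author's implementation. Assumptions: each item is a dict (or dict-like) with an `image_urls` list, the class name `SaveImagesPipeline` is invented for this example, and the download uses the standard library's `urlopen`, which blocks while fetching and is therefore a simplification compared with Scrapy's built-in `ImagesPipeline`.

```python
import os
from urllib.parse import urlparse
from urllib.request import urlopen


def fetch_bytes(url):
    """Download url and return the raw response body."""
    with urlopen(url, timeout=30) as resp:
        return resp.read()


class SaveImagesPipeline:
    """Write every URL in item['image_urls'] to a file in store_dir."""

    def __init__(self, store_dir=None, fetch=fetch_bytes):
        # Scrapy creates the pipeline without arguments, so defaults must work.
        self.store_dir = store_dir or os.path.expanduser("~/jiandan")
        self.fetch = fetch

    def process_item(self, item, spider):
        os.makedirs(self.store_dir, exist_ok=True)
        for url in item.get("image_urls", []):
            name = os.path.basename(urlparse(url).path)
            if not name:
                continue  # URL has no file name component
            path = os.path.join(self.store_dir, name)
            if os.path.exists(path):
                continue  # already downloaded in an earlier run
            with open(path, "wb") as fh:
                fh.write(self.fetch(url))
        return item  # pipelines return the item for any later stages
```

To enable such a pipeline, settings.py would need an `ITEM_PIPELINES` entry, for example `ITEM_PIPELINES = {"meizhiSpider.pipelines.SaveImagesPipeline": 300}`, where the module path assumes the class was placed in the project's pipelines.py.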